The concurrency flag, aka binary semaphore, is the most primitive synchronization structure. It is the basic concurrency building block, from which all manner of more complex synchronization algorithms can be implemented.

A concurrency flag has two states: up or down. It supports two atomic operations: lower and raise. The lower operation is enabled only when the flag is up, in which case it changes the state to down. If lower is invoked when the flag is down, it blocks until the flag is up. The raise operation is only defined when the flag is down, in which case it changes the state to up. It is erroneous to attempt to raise the flag when it is already up. Note the asymmetry: it is never erroneous to invoke lower — the operation will simply block until the flag can be lowered. Raise never blocks, but it should only be invoked when the flag is down.

(The concept of semaphore in concurrency theory is due to Edsker Dijkstra. He used the letter V to represent the raise operation, and P for the lower operation. V stands for verhogen, Dutch for “to raise”. P may stand for passering — “passing” — or probeer te verlagen — “try to decrease”.)

This post looks at the problem of designing a barrier protocol using concurrency flags. A barrier is a synchronization operation involving a set of threads. The basic property of a barrier is that no thread shall leave the barrier until all threads in the set have entered the barrier. A second desirable property is no unnecessary delay — once all threads have entered the barrier, all threads should be able to depart without unnecessary blocking. A third desirable property is reusability — the barrier should be able to be invoked multiple times in a program and work correctly.

It is easy to design a barrier with the first property but not the second: never let any thread leave the barrier. We will see it is actually tricky to design a barrier with all three properties.

The case of two threads is a good place to start. We can implement a 2-thread barrier using two flags, f1 and f2, both initially down. The protocol is

|

1 2 3 |

Thread 1: Thread 2: raise f1; lower f1; lower f2; raise f2; |

|

1 2 3 |

Thread 1: Thread 2: raise f1; lower f1; lower f2; raise f2; |

The first operation that will execute in this protocol is thread 1’s raise f1. After that, thread 1 must block, as f2 is down, but thread2 can now lower f1. Thread 1 is still blocked, so thread 2 raises f2 and now thread 2 may depart the barrier. Thread 1 may now lower f2 and depart.

The basic barrier property holds: if thread 1 arrives first to the barrier, it raises f1 but then blocks at the lower f2 and cannot proceed until thread 2 arrives and eventually raises f2. If thread 2 arrives at the barrier first, it blocks, at the lower f1. The property of no unnecessary delay is admittedly vague, but at a minimum it requires that once every thread arrives, eventually every thread should be able to leave, and that is the case for this protocol. The third property, reusability, also holds — I’ll hold off on a proof of that, but for now observe that at least the flags are returned to their original state after both threads leave the barrier.

It is helpful to think of “raise” as sending a signal, and “lower” as receiving that signal. Then we can view the protocol as follows:

|

1 2 3 |

Thread 1: Thread 2: raise f1; ------------> lower f1; lower f2; <------------ raise f2; |

First thread 1 sends a signal to thread 2. Thread 2 receives the signal and then sends a signal to thread 1. Thread 1 receives the signal.

We can obtain a more rigorous analysis of the protocol by examining its state space. The state space is a directed graph in which the nodes are program states, and an edge represents an atomic transition from one state to another. A state encodes the values of all variables in the program, including the location (program counter) of each thread, and the state of each flag. The reachable state space is the subgraph consisting of the states and transitions that are reachable from the initial state.

Let’s put our 2-thread barrier in a loop, to analyze reusability, and also label the two statements in each thread to provide values for the location:

|

1 2 3 4 5 |

Thread 1: Thread 2: while (true) { while (true) { 0: raise f1; 0: lower f1; 1: lower f2; 1: raise f2; } } |

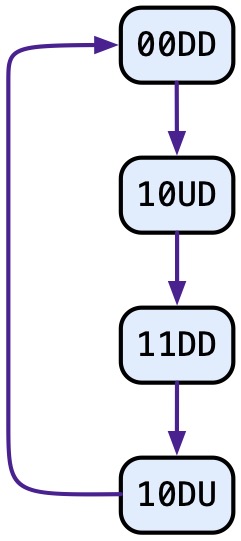

When Thread 1’s location is 0, its next action will be the raise operation, after which it moves to location 1. From location 1 (assuming f2 is up), Thread 1 lowers f2 and moves to location 0, etc. A state of this program may be represented as a tuple [pc1, pc2, f1, f2], where pc1 and pc2 are locations in {0,1}, and f1 and f2 are in {U,D} (U=up, D=down). The initial state is [0,0,D,D]. From that state, only one transition is enabled, Thread 1’s raise of f1, and the resulting state is [1,0,U,D]. Continuing in this way, we obtain the following reachable state space:

The reachable state space shows us that the barrier program is deterministic: at each reachable state, there is one and only one enabled transition. Furthermore, we may interpret location 0 as being “outside” the barrier and location 1 as being “inside” the barrier. The reachable state space also shows that no thread will leave the barrier until both have entered: the leaving transitions are the two transitions that change a 1 to a 0; the entering transitions change a 0 to a 1. The only path from the initial state proceeds with two enters, followed by two exits, repeated forever. This also shows there is no unnecessary delay (or at least all threads eventually leave the barrier), and the barrier is reusable, since we are doing all of this inside a loop. Incidentally we can also check that flags are used correctly, i.e., there is never an attempt to raise a flag that is already up.

One of the first times I showed this 2-flag barrier in a class, a student asked whether both threads could raise first, then lower. I thought about it. The two raises could occur in either order — fine, you end up at the same state in either case. Then the two lowers could happen in either order — also fine. And neither lower could occur without the raise in the other thread occurring first, guaranteeing the basic barrier property. So I said it looked right, but I would check. I went back to the office and thought about it. The program would become:

|

1 2 3 4 5 |

Thread 1: Thread 2: while (true) { while (true) { 0: raise f1; 0: raise f2; 1: lower f2; 1: lower f1; } } |

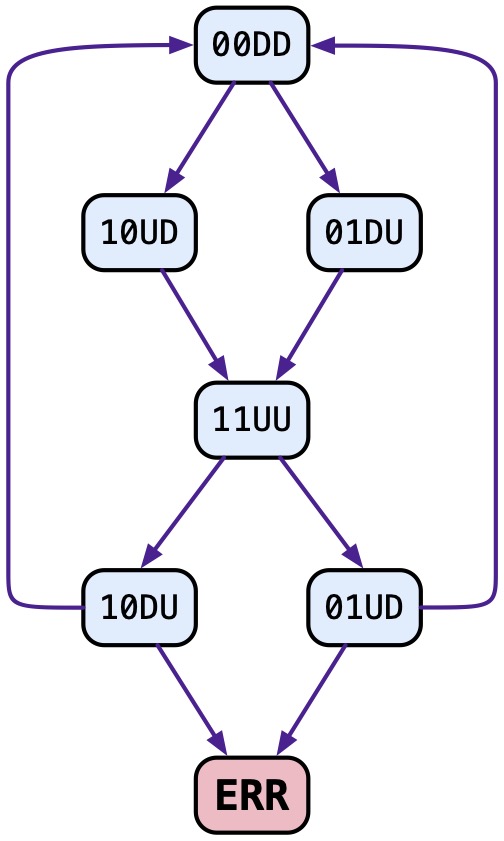

Let’s do the same state-enumeration analysis. This time the reachable state space looks like this:

The problem occurs from a state like [0,1,U,D]. From this state, Thread 2 could lower f1 and return to location 0, but also Thread 1 could attempt to raise f1 when f1 is already up, leading to the ERRor state. In fact, this barrier works correctly once, but it is not reusable: in the erroneous path, Thread 1 races to the second iteration of the loop and attempts to raise f1, while Thread 2 has not yet completed its lower operation in the first iteration. This is the kind of subtle interleaving-dependent issue that makes concurrent programming hard (and fun).

Now let’s see how we can automate this analysis. I will use CIVL, but any standard model checker will do. Here is a CIVL-C model of the first (correct) barrier program:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 |

typedef _Bool flag_t; flag_t f1=0, f2=0; void raise(flag_t * f) { $assert(!*f); *f = 1; } void lower(flag_t * f) { $when(*f) *f = 0; } void thread1() { while (1) { raise(&f1); lower(&f2); } } void thread2() { while (1) { lower(&f1); raise(&f2); } } int main() { $proc t1 = $spawn thread1(); $proc t2 = $spawn thread2(); $wait(t1); $wait(t2); } |

A flag is just a Boolean variable. The restriction on raise is checked by an assertion. The “when” statement in function lower causes the caller to block until the condition in parentheses is true, then executes the body of the statement; the check that the condition is true and execution of *f = 0 occur atomically, i.e., no other thread executes between those two points.

Running CIVL on this program yields:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

siegel@monk barrier % civl verify barrierGood.cvl CIVL v1.21+ of 2021-11-04 -- http://vsl.cis.udel.edu/civl === Source files === barrierGood.cvl (barrierGood.cvl) === Command === civl verify barrierGood.cvl === Stats === time (s) : 0.5 memory (bytes) : 285212672 max process count : 0 states : 17 states saved : 24 state matches : 1 transitions : 20 trace steps : 17 valid calls : 96 provers : z3 prover calls : 0 === Result === The standard properties hold for all executions. siegel@monk barrier % |

The key part of the output is “The standard properties hold for all executions”, indicating the assertion is never violated. Let’s do the same for the erroneous protocol:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 |

typedef _Bool flag_t; flag_t f1=0, f2=0; void raise(flag_t * f) { $assert(!*f); *f = 1; } void lower(flag_t * f) { $when(*f) *f = 0; } void thread1() { while (1) { raise(&f1); lower(&f2); } } void thread2() { while (1) { raise(&f2); lower(&f1); } } int main() { $proc t1 = $spawn thread1(); $proc t2 = $spawn thread2(); $wait(t1); $wait(t2); } |

Checking…

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

siegel@monk barrier % civl verify barrierBad.cvl CIVL v1.21+ of 2021-11-04 -- http://vsl.cis.udel.edu/civl Violation 0 encountered at depth 15: CIVL execution violation in p1 (kind: ASSERTION_VIOLATION, certainty: PROVEABLE) at barrierBad.cvl:7.2-13 $assert(!*f); ^^^^^^^^^^^^ Assertion: !(*(f)) -> !*&<d0>f1 -> !true -> false Context: true Call stacks: process 0: main@4 barrierBad.cvl:32.2-6 "$wait" process 1: raise@7 barrierBad.cvl:7.2-8 "$assert" called from thread1@13 barrierBad.cvl:17.4-8 "raise" process 2: lower@10 barrierBad.cvl:12.2-6 "$when" called from thread2@18 barrierBad.cvl:25.4-8 "lower" Logging new entry 0, writing trace to CIVLREP/barrierBad_0.trace Terminating search after finding 1 violation. === Source files === barrierBad.cvl (barrierBad.cvl) === Command === civl verify barrierBad.cvl === Stats === time (s) : 0.49 memory (bytes) : 285212672 max process count : 0 states : 15 states saved : 21 state matches : 0 transitions : 18 trace steps : 14 valid calls : 71 provers : z3 prover calls : 0 === Result === The program MAY NOT be correct. See CIVLREP/barrierBad_log.txt |

The output indicates the assertion is violated and shows that process 1 (i.e., Thread 1) is in the “raise” call while process 2 is just before the lower. We can see more details, including a blow-by-blow account of the execution that arrived at that point, as follows:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 |

siegel@monk barrier % civl replay -showTransitions barrierBad.cvl CIVL v1.21+ of 2021-11-04 -- http://vsl.cis.udel.edu/civl Initial state: State (id=9) | Path condition | | true | Dynamic scopes | | dyscope d0 (parent=NULL, static=1) | | | variables | | | | f1 = NULL | | | | f2 = NULL | Process states | | process 0 | | | call stack | | | | Frame[function=main, location=0, barrierBad.cvl:4.0-16 "flag_t f1=0, f2=0", dyscope=d0] Executed by p0 from State (id=9) 0->1: f1=false at barrierBad.cvl:4.0-16 "flag_t f1=0, f2=0" 1->2: f2=false at barrierBad.cvl:4.0-16 "flag_t f1=0, f2=0" 2->3: t1=$spawn thread1() [t1:=p1] at barrierBad.cvl:30.2-28 "$proc t1 = $spawn thread1 ... )" 3->4: t2=$spawn thread2() [t2:=p2] at barrierBad.cvl:31.2-28 "$proc t2 = $spawn thread2 ... )" --> State (id=24) Step 1: Executed by p1 from State (id=24) 12->13: LOOP_BODY_ENTER (guard: 1!=0) [$assume(true)] at barrierBad.cvl:16.9 "1" --> State (id=26) Step 2: Executed by p1 from State (id=26) 13->7: raise(&<d0>f1) at barrierBad.cvl:17.4-13 "raise(&f1)" --> State (id=29) Step 3: Executed by p2 from State (id=29) 16->17: LOOP_BODY_ENTER (guard: 1!=0) [$assume(true)] at barrierBad.cvl:23.9 "1" --> State (id=31) Step 4: Executed by p2 from State (id=31) 17->7: raise(&<d0>f2) at barrierBad.cvl:24.4-13 "raise(&f2)" --> State (id=34) Step 5: Executed by p2 from State (id=34) 7->8: $assert(!*&<d0>f2) at barrierBad.cvl:7.2-13 "$assert(!*f)" --> State (id=36) Step 6: Executed by p1 from State (id=36) 7->8: $assert(!*&<d0>f1) at barrierBad.cvl:7.2-13 "$assert(!*f)" --> State (id=38) Step 7: Executed by p1 from State (id=38) 8->9: *(&<d0>f1)=true at barrierBad.cvl:8.2-7 "*f = 1" 9->RET: raise(...) return at barrierBad.cvl:9.0 "}" --> State (id=43) Step 8: Executed by p1 from State (id=43) 14->10: lower(&<d0>f2) at barrierBad.cvl:18.4-13 "lower(&f2)" --> State (id=46) Step 9: Executed by p2 from State (id=46) 8->9: *(&<d0>f2)=true at barrierBad.cvl:8.2-7 "*f = 1" 9->RET: raise(...) return at barrierBad.cvl:9.0 "}" --> State (id=51) Step 10: Executed by p2 from State (id=51) 18->10: lower(&<d0>f1) at barrierBad.cvl:25.4-13 "lower(&f1)" --> State (id=54) Step 11: Executed by p1 from State (id=54) 10->11: *(&<d0>f2)=false at barrierBad.cvl:12.12-17 "*f = 0" 11->RET: lower(...) return at barrierBad.cvl:13.0 "}" --> State (id=59) Step 12: Executed by p1 from State (id=59) 12->13: LOOP_BODY_ENTER (guard: 1!=0) [$assume(true)] at barrierBad.cvl:16.9 "1" --> State (id=61) Step 13: Executed by p1 from State (id=61) 13->7: raise(&<d0>f1) at barrierBad.cvl:17.4-13 "raise(&f1)" --> State (id=64) Step 14: Error 0: CIVL execution violation in p1 (kind: ASSERTION_VIOLATION, certainty: PROVEABLE) at barrierBad.cvl:7.2-13 $assert(!*f); ^^^^^^^^^^^^ Assertion: !(*(f)) -> !*&<d0>f1 -> !true -> false Context: true Call stacks: process 0: main@4 barrierBad.cvl:32.2-6 "$wait" process 1: raise@7 barrierBad.cvl:7.2-8 "$assert" called from thread1@13 barrierBad.cvl:17.4-8 "raise" process 2: lower@10 barrierBad.cvl:12.2-6 "$when" called from thread2@18 barrierBad.cvl:25.4-8 "lower" --> State (id=66) Step 15: Trace ends after 15 trace steps. Violation(s) found. === Source files === barrierBad.cvl (barrierBad.cvl) === Command === civl replay -showTransitions barrierBad.cvl === Stats === time (s) : 0.44 memory (bytes) : 285212672 max process count : 0 states : 16 valid calls : 72 provers : z3 prover calls : 0 |

That, in a nutshell, is how you can use a model checker to verify, or find a bug in, a concurrent algorithm. And if you are a teacher looking for examples of simple “truthy” concurrent protocols with subtle bugs, you can’t do much better than this erroneous 2-thread barrier.

This is such a great example. So simple yet so effective at displaying the subtleties of concurrency (and how model checking can help)!

I wonder if you could formalize the second property of “no unnecessary delays” as something like “once every thread has entered the barrier, each thread will block O(f(N)) times before leaving the barrier” where N is the number of participating threads and f is some “small” function like ln(N). Of course in this post we are only considering 2 threads so this would only make sense if you are talking about barriers that accept any number of threads.